Foreign malign influence in the U.S. presidential election got off to a slower start than in 2016 and 2020 because of the less contested primary season. Russian efforts are focused on undermining U.S. support for Ukraine, while China seeks to exploit societal polarization and diminish faith in U.S. democratic systems. Additionally, fears that sophisticated AI deepfake videos would succeed in manipulating voters have not yet been borne out, but simpler “shallow” AI-enhanced and AI audio fake content will likely have more success. These insights and analysis are contained in the second Microsoft Threat Intelligence Election Report, published today.

Russia deeply invested in undermining U.S. support for Ukraine

Russian influence operations (IO) have picked up steam in the past two months. The Microsoft Threat Analysis Center (MTAC) has tracked at least 70 Russian actors engaged in Ukraine-focused disinformation, using traditional and social media and a mix of covert and overt campaigns.

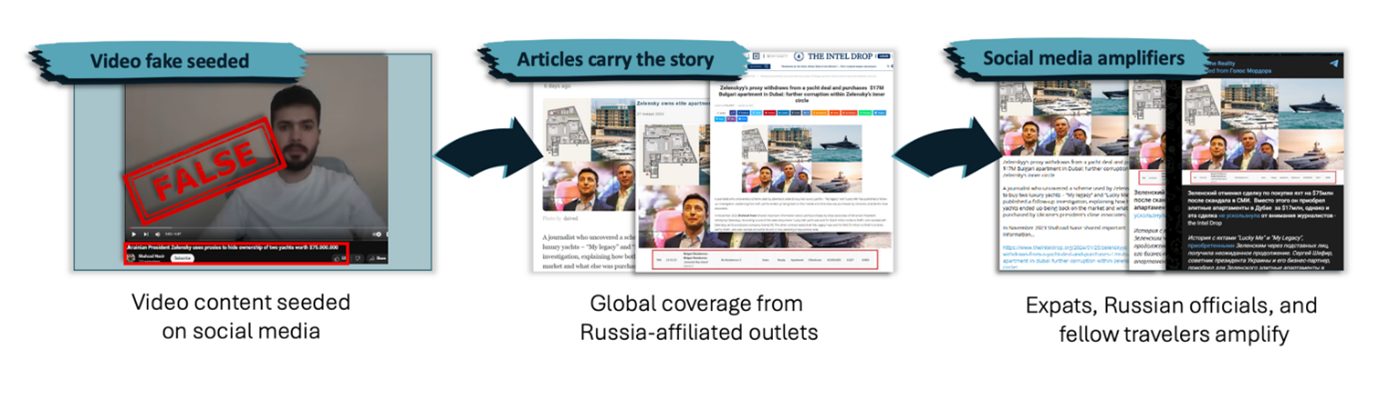

For example, the actor Microsoft tracks as Storm-1516 has successfully laundered anti-Ukraine narratives into U.S. audiences using a consistent pattern across multiple languages. Typically, this group follows a three-stage process:

- An individual presents as a whistleblower or citizen journalist, seeding a narrative on a purpose-built video channel

- The video is then covered by a seemingly unaffiliated global network of covertly managed websites

- Russian expats, officials, and fellow travellers then amplify this coverage.

Ultimately, U.S. audiences repeat and repost the disinformation, likely unaware of the original source.

China seeks to widen societal divisions and undermine democratic systems

China is using a multi-tiered approach in its election-focused activity. It capitalizes on existing socio-political divides and aligns its attacks with partisan interests to encourage organic circulation.

China’s increasing use of AI in election-related influence campaigns is where it diverges from Russia. While Russia’s use of AI continues to evolve in impact, People’s Republic of China (PRC) and Chinese Communist Party (CCP)-linked actors leverage generative AI technologies to effectively create and enhance images, memes, and videos.

Limited activity so far from Iran, a frequent late-game spoiler

Iran’s past behavior suggests it will likely launch acute cyber-enabled influence operations closer to U.S. Election Day. Tehran’s election interference strategy adopts a distinct approach: combining cyber and influence operations for greater impact. The ongoing conflict in the Middle East may mean Iran evolves its planned goals and efforts directed at the U.S.

Generative AI in Election 2024 – risks remain but differ from the expected

Since the democratization of generative AI in late 2022, many have feared this technology will be used to change the outcome of elections. MTAC has worked with multiple teams across Microsoft to identify, triage, and analyze malicious nation-state use of generative AI in influence operations.

In short, the use of high-production synthetic deepfake videos of world leaders and candidates has so far not caused mass deception and broad-based confusion. In fact, we have seen that audiences are more likely to gravitate towards and share simple digital forgeries, which influence actors have used over the past decade. One example is false news stories with real news agency logos embossed on them.

Audiences do fall for generative AI content on occasion, though the scenarios that succeed have considerable nuance. Our report today explains how the following factors contribute to generative AI risk to elections in 2024:

- AI-enhanced content is more influential than fully AI-generated content

- AI audio is more impactful than AI video

- Fake content purporting to come from a private setting, such as a phone call, is more effective than fake content from a public setting, such as a deepfake video of a world leader

- Disinformation messaging has more cut-through during times of crisis and breaking news

- Impersonations of lesser-known people work better than impersonations of very well-known people such as world leaders

Leading up to Election Day, MTAC will continue identifying and analyzing malicious generative AI use and will update our assessment incrementally, as we expect Russia, Iran, and China will all increase the pace of influence and interference activity as November approaches. One caveat of critical note: If there is a sophisticated deepfake launched to influence the election in November, the tool used to make the manipulation has likely not yet entered the marketplace. Video, audio, and image AI tools of increasing sophistication enter the market nearly every day. The above assessment arises from what MTAC has observed thus far, but it is difficult to know what we’ll observe from generative AI between now and November.

The above assessment arises from what MTAC has observed thus far, but as both generative AI and the geopolitical goals of Russia, Iran, and China evolve between now and November, risks to the 2024 election may shift with time.